Pleased to share that the issue of the Annals of the History of Computing that Miriam Posner and I edited on “Logistical Histories of Computing” is now online, featuring wonderful contributions by Ingrid Burrington, Verónica Uribe del Águila, Andrew Lison, and Kyle Stine.

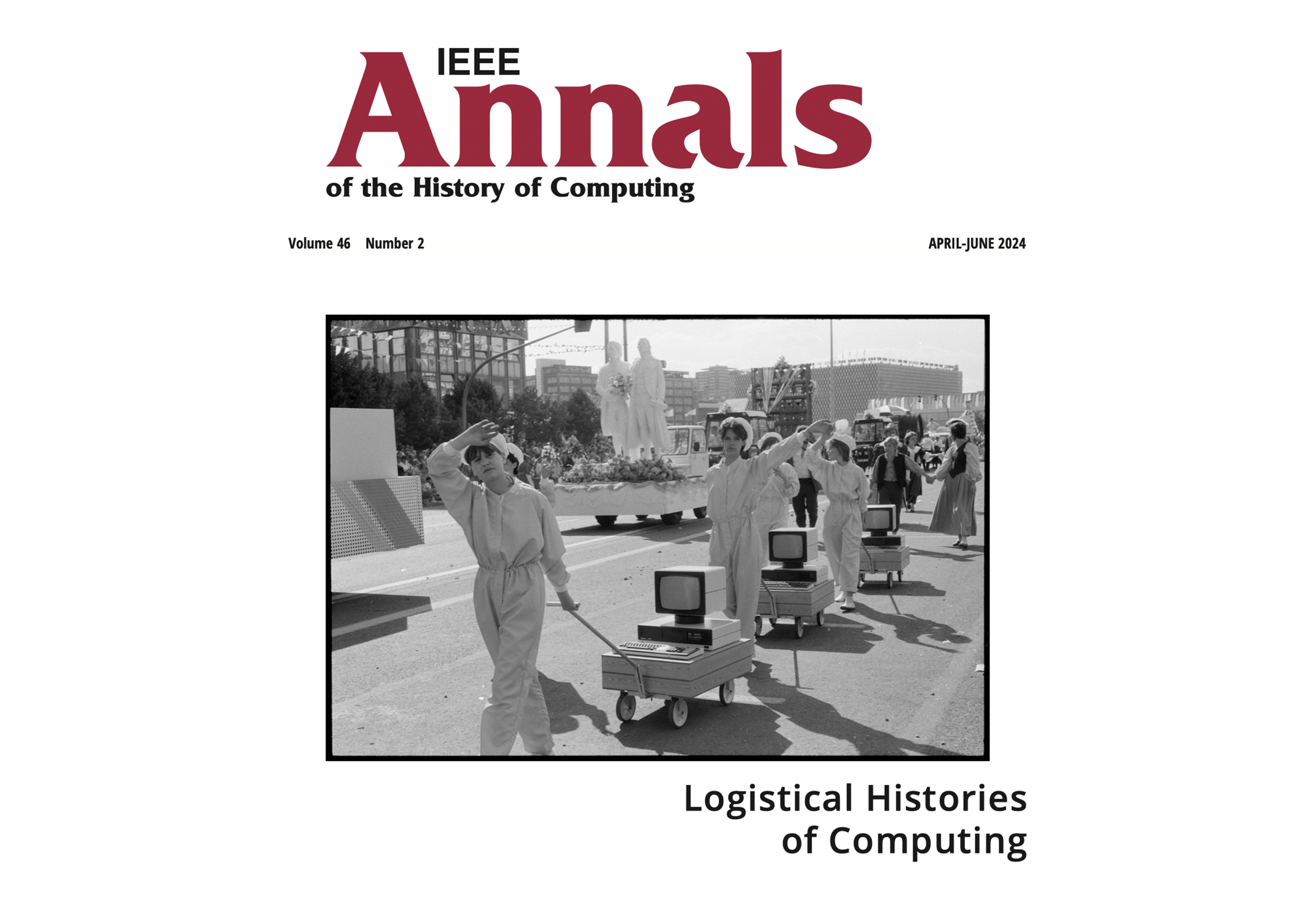

GDR delegation from Erfurt presenting Sömmerdan VEB Robotron PC 1715s (running Zilog Z80 clone U880 microprocessors) at a celebration for Berlin’s 750th anniversary (July 1987) (German Federal Archives, [BArch, Bild 183-1987-0704-077 / Uhlemann, Thomas])

Integrated Circuits

How does the global supply chain matter to the history of computing? It seems clear that it does matter—after all, the iPhone achieved its market dominance in part because of Apple’s world-leading supply chain. More recently, a global semiconductor shortage has stymied production of everything from Volkswagens to Xboxes. And of course, the systems organizing the sourcing, production, and distribution for nearly all of our products are designed and delivered by computer.1

Over the last half-century, the “lowly chip” has gone from “little-understood workhorse in powerful computers to the most crucial and expensive component under the hood of modern-day gadgets” and the proverbial center of our world.2 The history of production in the 20th century may have begun on the automotive assembly line, but it ended here—with the computer, its parts and processes spread throughout the world.

What makes the supply chain so important to this story is that no prior technology had required as complex and diverse an array of materials, expertise, and infrastructure as the personal computer. It is almost impossible to imagine its assembly apart from the extensive networks of subcontractors and suppliers it demanded, or outside the era of globalization and supply chain capitalism it inaugurated. But perhaps part of the difficulty in recognizing this relationship is that all too often it is the computer’s reception that is made social, not its production.

As the articles in this issue suggest, the supply chain was the computer’s first social network, enrolling all manner of geographies, all kinds of practices, and all sorts of people. This history of computing is not confined to the garage, the dorm, or the boardroom, but shared throughout the factories, ports, and warehouses along its path.3

But questions remain. What does it mean to talk about the supply chain of the computer, or even to study a supply chain? Like other instances of infrastructure, a supply chain is defined by its multiplicity. So is the object of our analysis specific locales, the information system in its entirety, or the patchwork of national and international laws that facilitate global exchange? And is it possible that supply chain management has a particular kinship with computing?

After all, many Cold War-era thinkers applied their methods equally to computing and logistics: RAND had a Logistics Laboratory4 5, Kenneth Arrow wrote a pioneering work on inventory control6, and Jay Forrester’s “bullwhip effect” continues to define information flow in the field of distribution.7 The roots of logistics, it would seem, were planted in the same soil that fostered the growth of computation.

The articles in this issue argue for the importance of a logistical history of computing by surfacing accounts of computers and components, from quartz crystals to semiconductors, as they were produced across logistical spaces like Malaysia, Mexico, and Manaus. While the impact of the global chip shortage may have brought public attention to these productive pathways, the turn to a critical study of logistics demands that we consider not only the varied circumstances of these networks, but also the complex social patterns and environmental transformations their construction has entailed.

Examining the ways in which corporations and states exploited legal and economic peripheries and differences in gender, race, and class, and developed practices of outsourcing and offshoring, this issue argues for the central place of computers not only as products of global logistical operation, but as the very sociotechnical infrastructure through which it was constituted. Bringing the history of computing into dialog with the history of the supply chain prompts questions about the origin and evolution of computational technologies.

In some cases, it can show us how the ostensibly universal functions of technologies are in fact closely tied up with flows across global boundaries and borders. Andrew Lison demonstrates this capacity in his examination of the Zilog Z80 microprocessors: compatibility looks different and functions differently, he shows, depending on whether you are in the Global South or the North. Verónica Uribe del Águila, similarly, shows us that the nature of “innovation” has a great deal to do with neoliberal notions of market governance in Latin America.

The critical study of logistics (what some call supply studies) is an emerging interdisciplinary field that enrolls work in cultural theory, geography, history, anthropology, and political economy to engage with global supply chains while continuing accounts of production and exchange developed within the history of technology and industrial development. “If the corporate goal is to build a supply chain so seamless that its existence barely registers with consumers,” writes Jackie Brown on the global impact of supply chains, this approach looks “to turn this process inside out, exposing the human and environmental costs obscured by slick design and packaging.” In investigating the “metals, refineries, factories, shipping containers, and warehouses” of global assembly, we attempt to “distill and make legible” the conditions that surround these networks.8 In doing so we recognize that the supply chain, at least as it has come to be understood, has both a troubling future and, as we will see, a complex and often problematic past.

A Hungry Machine

The computer has always been a global product, but the dependencies required for the enormous distances comprising it have been increasingly called into question. Even labor, the component historically cast as the easiest to shift from one geography to another, rests on an unsteady assemblage that includes machine tools, operational practices, and economic conditions. In 2019, The New York Times reported on the difficulties Apple found in manufacturing even a single element of its product line in the United States. Despite Tim Cook’s 2012 announcement that the Mac Pro would soon be “assembled in the USA” by American workers, the smallest details proved difficult, with the company struggling even to find screws.

The problem, Jack Nicas noted, was that “in China, Apple relied on factories that can produce vast quantities of custom screws on short notice.”9 Texas simply could not reproduce that network. “By the time the computer was ready for mass production,” he wrote, the company had finally managed to source the screws—but from China instead of the United States.9 In US political discourse the primary interpretation of stories like this was of economic reliance on a foreign country. But this neglects the fundamental nature of the supply chain.

Benjamin Bratton writes that there is “now a vast network of many billions of little Turing machines” that “intake and absorb the Earth’s chemistry in order to function.” This “stack,” he continues, “is a hungry machine,” and while “its curated population of algorithms may be all but massless,” their processing of “Earthly material” is a physical event, with “the range of possible translations between information and mechanical appetites” limited not only by mathematics but “by the real finitude of substances that can force communication between both sides of this encounter”.10 So while China may be an important node in the global supply chain, it cannot be the only one.

The computing supply chain—like all supply chains—is not a chain at all. It is a vast and densely interwoven web of companies, cultures, and countries. The integrated circuit, it seems, is itself the result of an integrated circuit, one just as complex and fragile as the sort soldered onto boards. But the limits of this global circuit can only be seen when it breaks down, rendering itself visible and—if only for a moment—open to investigation.11

These moments occur on the fault lines of the supply chain, chokepoints where pathways narrow and lines constrict. Sometimes they are accidental. When a fire spread through South Korean memory manufacturer SK Hynix’s production plant in Wuxi, China, the price of a 2-gigabit DRAM module jumped 20%, and industry analysts predicted severe impacts to smartphones and laptops within a month.12 Others can be traced back to natural disasters, sometimes amplified by the compounding effects of global climate change. The earthquake that was responsible for the Fukushima nuclear accident, for example, had widespread impacts across Japanese electronics manufacturing.

The 2011 Thailand floods caused severe disruption to the hard disk drive industry there (which accounted for between 25% and 40% of global output), leading to a reduction in available hard drives and a corresponding increase in their cost, a circumstance partially responsible for accelerating the adoption of solid-state drives.13 That a single region in a single country was responsible for so much of a critical component for the industry is an inevitable result of the efficiency logic governing supply chains. As Joshua Romero put it, “this clustering of a highly specialized industry has become common in the global trend toward lean supply chains and just-in-time manufacturing” as companies look to save costs “by minimizing the components they store in inventory and minimizing their distance to suppliers.” While analysts predicted a reduction of up to 30% in worldwide hard disk availability, recovery was swifter than expected. But it was not cheap, with Western Digital putting its costs to rebuild in the “hundreds of millions of dollars.”14

As the chip shortage became an increasing concern during the COVID-19 pandemic, this form of logistical failure blurred into other supply shortages. The most familiar occurrence for consumers became the frequent delivery (through social networking sites like Facebook, Twitter, and Instagram) of images detailing a widespread lack of delivery—absent items, empty shelves, and delayed orders. Indeed, outside of those who work directly in the logistics industry, in the warehouses, factories, and ports of the global supply chain, this is the liminal site of supply—where we come face-to-face with all the logistical promises and perils of the modern age. But if these chips were absent from the store shelf, they were still everywhere to be found in the back of the store.

Joe Allen recalls that retail was once “considered a backwater of American capitalism,” with everyone from Thomas Edison to Peter Drucker lamenting its sorry state. Drucker famously remarked that distribution was “one of the most sadly neglected” and yet, “most promising” areas of American business.15 This changed in the 1970s and 1980s as computers came to colonize nearly all aspects of production, distribution, and consumption.

Beginning at IBM, prototypes of MRP—material requirements planning—systems were developed, first with the Production Information Control System (PICS) and then with its “Communication”-oriented successor (COPICS). In 1972, a group of five German engineers left IBM to start a company called SAP—today the leading logistical platform for managing all aspects of the supply chain. Warehouse inventories were computerized alongside factories, and technologies like barcodes brought the computer to consumers in line at the checkout.

More technologies—from satellite communication to GPS systems—radically altered the information chain that surrounded the movement of material goods. Every aspect of a product’s movement, from assembly line to store shelf, was now, at least to some degree, computerized. The result is that we can walk into an Apple store today and no sooner than we have left with a computer, phone, or tablet, the inventory system will be updated to schedule another box be placed on a shelf, another truck to leave the distribution center, or another night on the assembly line at Foxconn. This is true of both traditional retail companies like Walmart and digital storefronts like Amazon. And its precision has only increased.

One of the first things Tim Cook did when he joined Apple was to invest in “a state-of-the-art enterprise resource planning system from SAP that hooked directly into the IT systems at Apple’s parts suppliers.” The forecasting capabilities of the new system allowed Apple to slash inventory levels from 30 days to six.16

The computer, along with the networks that connect it and the logistical software systems that run on it, sits at the center of all modern supply chains. As Matthew Hockenberry suggests, “supply chains are defined as much by their communications networks and media technologies as they are by their containers and pallets.”17 Miriam Posner writes that with logistical software systems like SAP, businesses are able to “focus not on one single functional unit of a business at a time, such as distribution or marketing, but on the through line that connects each part of the product’s travel through the company, from idea to the customer’s hands.”18 In such a system, she explains, “a sense of inevitability takes hold”:

SAP’s built-in optimizers work out how to meet production needs with the least “latency” and at the lowest possible costs. (The software even suggests how tightly a container should be packed, to save on shipping charges.) This entails that particular components become available at particular times. The consequences of this relentless optimization are well-documented. The corporations that commission products pass their computationally determined demands on to their subcontractors, who then put extraordinary pressure on their employees.19 20

Reflecting on the MRP systems he developed at IBM, Richard Lilly records that one of the consequences of the severe memory limitations they encountered in the computer modeling of production was that bills of material had to be split into smaller and more discrete subassemblies in order to process them. The long-term implication, he observed, was that products themselves were increasingly broken into innumerable, minute subcomponents, almost ready-made for global subcontracting.21 Still, even if the logistical pathways the computer connected had begun as something of a side effect, we now live in the world they have built, so much so that it is difficult to think beyond it.

Matthew Hockenberry recalls that “logistical technologies have always been accompanied by new ways of seeing and listening, reading and knowing, thinking and moving—which have themselves catalyzed crucial shifts in our modes of communication.”17 The consequence is that we can only think production on the screen, and we can only organize distribution through the algorithm.

But the precondition for the computerized supply chain was the computer, and that began with the construction of a closely coupled world. After all, computers, at least as we have made them, require a vast and varied set of materials from across the globe. Plastics such as ABS, PVC, and PMMA; metals such as gold, silver, titanium, and tin; elements and minerals, both common and rare, from europium and yttrium to silicon, lithium, tantalum, and cobalt; even, as Ingrid Burrington writes, crystals made of quartz: all have become increasingly indispensable to the insatiable supply chain required for doing what Turing proved needed only a strip of tape and the means to mark it. These diverse materials substituted for the impossibility of his infinite storage.

But the world is not infinite either, and the costs of computing have become increasingly concerning in the face of the demands on our labor, our environment, and our humanity (see for example: 22 23 24 25 26). And despite its central place in programming our contemporary process of supply, the computer did not create all of these connections. Indeed, it emerged from them. In our logistical history we begin not with the computer’s assembly line, but its self-assembly. We find here a global marketplace, but not yet the software of the supply chain.

Assembly Required

The history of computing is a history of making, of different designs for different devices to be sourced, assembled, and sold—all at very different scales. Early computers were essentially artifactual productions—small in number and carefully constructed. They were built like cathedrals, in other words, rather than printed like bibles. This is not to say that they did not require materials—or making—but rather that their manufacture came at a scale altogether different from that of the personal computer, to say nothing of contemporary laptops, tablets, and smartphones.

As James Cortada notes, IBM sold only 12,000 1401 mainframes between 1959 and 1971. When IBM introduced the System/360 architecture, the company received 100,000 orders, though it struggled to fill them.27 When it introduced its personal computer, the IBM 5150, it sold 750,000 in the first two years.28

The kit is a central component in the history of the computer. Accounts of the early development of systems like the Altair or Apple I often romanticize this fact: the computer was a product developed by hobbyists for other hobbyists. The whole point of the purchase was to be able to build the thing yourself, to be able to learn, to customize, and to control the end result. But these accounts often minimize the logistical constraints that necessitated this form of delivery. These products were not quite yet products, and they were sold as kits because there was no means (or desire) to manufacture them. Alongside the invention of the personal computer, a workforce and assembly process had to be invented too. While there were predecessors, in the manufacture of electronics such as radios and calculators or in complex mechanical devices such as typewriters and sewing machines, there was nothing that quite captured the requirements for this new technology. Even companies well suited to the manufacture of computers and their components faced risks in altering lines to accommodate untested ideas.29 Hewlett-Packard famously passed on Steve Wozniak’s computer project, in part because it did not see the true market potential for it, but also because it was not equipped (materially and organizationally) to manufacture such a simple, unpackaged product.30

Still, the move to no (or at least less) assembly required came quickly. Paul Terrell’s Byte Shop notably paid Steve Jobs to deliver prebuilt computers instead of just a kit. As Laine Nooney writes, “Terrell was not interested in retailing a raw circuit board that hobbyists had to finish themselves.” Sensing that the “commercial market for microcomputers outstripped the narrow confines of Homebrew,” he saw that “what the customers coming into Byte Shop wanted was computing power without the labor of assembly.”31 This was part of a shift from kits for hobbyists to machines for “users,” but it was also a shift in the labor demands of assembly. The orders were completed with the help of Jobs’ “pregnant sister” Patty, who was paid one dollar a unit to “stuff” chips onto the boards while she watched soap operas.31

In 1980 Carl Helmers, Byte magazine cofounder and editor, wrote of a new era of “off-the-shelf personal computers,” with his “‘ideal’ abstraction of a personal computer cast into a specific and eminently useful form as a mass-produced product.”32 But that mass-production had required a corresponding mass of people to serve as a workforce. And in contrast to the largely male, mostly white demographic of kit hobbyists, this mode of production was gendered and racialized in very different ways. Patty’s work on Apple’s informal assembly line was—in much the way Verónica Uribe del Águila argues CTS’s lean management experiment in Mexico was—something of a prototype. Women, especially women of color, were central to the production of the personal computer at scale.

Anna Tsing notes that “commodity chains based on subcontracting, outsourcing, and allied arrangements” are one of the fundamental features of “supply chain capitalism.” From almost the very beginning subcontracting was seen as essential to delivering machines, with outsourcing (to places like Singapore, Japan, Taiwan, and South Korea) close behind. Apple’s first CEO, Michael Scott, remarked that Apple’s business was in “designing, educating, and marketing.” A corollary followed: “let the subcontractors have the problems.” It was a sentiment shared by their competitors.33 At Apple, Patty was replaced, first by Hildy Licht and her team of Silicon Valley “housewives,”3 and then by the General Technology Corporation. In 1983 GTC had 450 employees, and a workforce typical of other subcontracting firms:

Many are women who are black, Spanish-speaking, or members of other minority groups. They start working at minimum wage and are trained to insert the pronged chips into the circuit boards. The work is boring and the chances of upward mobility are dim for board-stuffers. Each employee signs a checklist after completing a board to indicate responsibility…then the board is tested, any mistakes are corrected, and out the door go the ‘guts’ of another…computer. A board may go to Apple’s Singapore assembly plant, where it joins the plastic case, the keyboard, and other components to be mounted on an aluminum base plate. It all fits together with ten screws, just as Woz designed it in 1976.33 (see also: 34 35)

Outsourcing was itself replaced by offshoring. Apple came to Singapore in 1981 to produce circuit boards for the Apple II. By 1985, Apple was doing final assembly of the Apple II there, and by 1990 Singapore was responsible for the design and manufacturing for all Asia-Pacific markets as well as boards, monitors, and peripherals for the global market. The trend toward outsourcing accelerated over the decade, with companies like Compaq, HP, and IBM moving their production to East Asian sites like Singapore, Taiwan, and South Korea.36 When Apple introduced the iMac, it handed production to LG—first with monitors in 1998, and then with the entire assembly the following year. Shortly after, Apple entered into an agreement with a company called Foxconn, and they, along with companies like Dell and IBM, began the move toward China as the global hub of computer production.37

Logistical Histories

The work in this special issue presents accounts of the computing supply chain across a range of geographical and historical sites. Each examines the relationship between computing’s raw materials and organizational structures as well as the underlying logistical concepts critical to contemporary supply chains. Ingrid Burrington, for example, explores the early manufacture of silicon chips, contrasting manufacturers’ claims that silicon comes from “ordinary sand” with the reality that silicon is made from high-purity quartz. “The invention of silicon chips did not rely on ordinary sand but on extraordinary crystals,” Burrington writes. In the first years of silicon production, Burrington explains, this ultrapurified quartz came from Brazil, where low-wage workers used hand tools to mine quartz in a largely unregulated market. When manufacturers popularized a misunderstanding of silicon’s origins, Burrington writes, they contributed to a vision of plenitude and profusion that continues to underlie our understanding of computational supply chains. In reality, silicon’s source material is scarce, labor-intensive, and dependent on the structural inequality that encourages laborers to work for low wages in an unregulated industry.

In her contribution, Verónica Uribe del Águila tells the story of El Centro de Tecnología de Semiconductores (CTS), a cluster of businesses and laboratories established in a partnership between the Mexican federal government and IBM. Describing the contemporary Mexican context for work, innovation, and technology, she shows how the country’s approach to trade evolved from protectionism to a faith in the free market. CTS was a center of innovation, Uribe del Águila explains, but it also established a neoliberal model of outsourced production and “lean” business practices that weakened workers’ rights.

“We…might think of computing industry logistical trends as oscillating between alternating, if overlapping, waves of consolidation and fragmentation,” writes Andrew Lison in his examination of the Zilog Z80 microprocessor. Lison follows the Z80 into Brazilian recording studios and East German game arcades, showing how the hardware’s interoperability gave rise to a wide array of incompatible implementations (and imitators). “The logistics of the Z80’s production and proliferation, including its illicit appropriation behind the Iron Curtain, can therefore offer a deeper understanding of computational interoperability and its limitations prior to the widespread adoption of the Internet,” writes Lison.

Kyle Stine expands our understanding of globalization by explaining how the Signetics Corporation decided where and how it would build its offshore locations. Signetics considered not only cheap labor, Stine explains, but also how its facilities would fit into existing logistical and infrastructural networks. Signetics executives wanted to ensure that workers would have housing, that its plants would have adequate and reliable power, and that its facilities would be served by efficient and reliable distribution infrastructure. Stine intervenes in histories of globalization that focus either on the top (corporate executives) or the bottom (the lives of workers) of the process, arguing for a meso-level of historical analysis that can be located in companies’ logistical decision making. In basing its decision on the holistic factors affecting its human workforce, Stine argues, Signetics was interested in “the logistics of life itself.”

References

1. M. Posner, “The software at our doorsteps,” IEEE Ann. Hist. Comput., vol. 44, no. 1, pp. 133–134, Jan.–Mar. 2022.

2. I. King, D. Wu, and D. Pogkas, “How a chip shortage snarled everything from phones to cars,” Bloomberg, Mar. 2021.

3. M. Hockenberry, “On logistical histories of computing,” IEEE Ann. Hist. Comput., vol. 44, no. 1, pp. 135–136, Jan.–Mar. 2022.

4. M. A. Geisler, A Personal History of Logistics. Tysons, VA, USA: Logistics Management Inst., 1986.

5. J. Klein, “Implementation rationality: The nexus of psychology and economics at the RAND Logistics Systems Laboratory, 1956–1966,” Hist. Political Econ., vol. 48, no. 1, pp. 198–225, 2016.

6. K. J. Arrow, T. Harris, and J. Marschak, “Optimal inventory policy,” Econometrica, vol. 19, no. 3, pp. 250–272, Jul. 1951.

7. J. Forrester, Industrial Dynamics. Waltham, MA, USA: Pegasus Communications, 1961.

8. J. Brown, “Source material,” Real Life, Mar. 2021.

9. J. Nicas, “A tiny screw shows why iPhones won’t be ‘assembled in U.S.A.,’” New York Times, Jan. 2019.

10. B. Bratton, The Stack: On Software and Sovereignty. Cambridge, MA, USA: MIT Press, 2015.

11. S. L. Star, “The ethnography of infrastructure,” Amer. Behav. Scientist, vol. 43, no. 3, pp. 377–391, 1999.

12. L. Mirani, “How a little-noticed factory fire disrupted the global electronics supply,” Quartz, Sep. 2013.

13. C. Arthur, “Thailand’s devastating floods are hitting PC hard drive supplies, warn analysts,” The Guardian, Oct. 2011.

14. J. Romero, “The lessons of Thailand’s flood,” IEEE Spectr., vol. 49, no. 11, pp. 11–12, Nov. 2012.

15. J. Allen, “Studying logistics,” Jacobin, Feb. 2015.

16. L. Kahney, Tim Cook: The Genius Who Took Apple to the Next Level. Baltimore, MD, USA: Penguin, 2019.

17. M. Hockenberry, N. Starosielski, and S. Zieger, Assembly Codes: The Logistics of Media. Durham, NC, USA: Duke Univ. Press, 2021.

18. M. Posner, “Breakpoints and black boxes: Information in global supply chains,” Postmodern Culture, vol. 31, no. 3, 2021.

19. M. Posner, “The software that shapes workers’ lives,” The New Yorker, Mar. 2019.

20. N. Rossiter, Software, Infrastructure, Labor: A Media Theory of Logistical Nightmares. Evanston, IL, USA: Routledge, 2016.

21. R. Lilly, The Road to Manufacturing Success. Boca Raton, FL, USA: St. Lucie Press, 2001.

22. S. Bologna, “Inside logistics: Organization, work, distinctions,” Viewpoint Mag., no. 4, Sep./Oct. 2014.

23. J. Chan, P. Ngai, and M. Selden, “The politics of global production: Apple, Foxconn, and China’s new working class,” New Technol., Work Employment, vol. 28, no. 2, pp. 100–115, Jul. 2013.

24. M. Danyluk, “Capital’s logistical fix: Accumulation, globalization, and the survival of capitalism,” in C. Chua, M. Danyluk, D. Cowen, and L. Khalili, “Turbulent circulation: Building a critical engagement with logistics,” Soc. Space, vol. 36, no. 4, Aug. 2018.

25. S. Taffel, “AirPods and the Earth: Digital technologies, planned obsolescence and the Capitalocene,” Environ. Plan. E, Nature Space, vol. 6, no. 1, Jan. 2022.

26. A. Williams, M. Miceli, and T. Gebru, “The exploited labor behind artificial intelligence,” Noema, Oct. 2022.

27. J. Cortada, “Building the System/360 mainframe nearly destroyed IBM,” IEEE Spectr., Apr. 2019.

28. IBM, “The PS/2,” Accessed: Mar. 2024. [Online]. Available: https://www.ibm.com/history/ps-2

29. T. Sasaki, Oral History Conducted By William Aspray. Piscataway, NJ, USA: IEEE History Center, 1994.

30. S. Wozniak and G. Smith, iWoz: Computer Geek to Cult Icon, How I Invented the Personal Computer, Co-Founded Apple, and Had Fun Doing It. New York, NY, USA: Norton, 2006.

31. L. Nooney, The Apple II Age: How the Computer Became Personal. Chicago, IL, USA: Chicago Univ. Press, 2023.

32. C. Helmers, “The era of off-the-shelf personal computers has arrived,” Byte, vol. 5, no. 1, Jan. 1980.

33. E. M. Rogers and J. K. Larsen, Silicon Valley Fever: Growth of High-Technology Culture. New York, NY, USA: Basic Books, 1984.

34. M. Moritz, The Little Kingdom: The Private Story of Apple Computer. New York, NY, USA: William Morrow, 1984.

35. “Assembling micros: They will sell no Apple before its time,” InfoWorld, Mar. 1982.

36. J. Dedrick and K. L. Kraemer, Asia’s Computer Challenge: Threat or Opportunity for the United States and the World? London, U.K.: Oxford Univ. Press, 1998.

37. L. Kahney, Jony Ive: The Genius Behind Apple’s Greatest Products. Baltimore, MD, USA: Penguin, 2013.